I’ve spent the last eight years building data pipelines on Google Cloud Platform (GCP), working with everything from tiny startups to enterprises processing billions of events daily. I’ve made mistakes, learned hard lessons, and discovered what actually matters when building a career in this field.

This data engineering roadmap isn’t theoretical—it’s battle-tested. Whether you’re a software developer looking to pivot, a data analyst wanting to level up, or a complete beginner, this guide will show you exactly what you need to learn and in what order.

Let’s dive in.

Introduction: Why 2025 is the Best Time to Become a Data Engineer

Eight years ago, when I started my journey as a data engineer, the field looked completely different. We were still debating whether Hadoop would dominate forever, and “cloud-native” was just a buzzword most companies were afraid to embrace.

Fast forward to 2025, and I can confidently say: this is the golden age of data engineering.

Why? Because the data engineer roadmap has never been clearer, the tools have never been more powerful, and the demand has never been higher. Companies aren’t just collecting data anymore—they’re drowning in it. They need people who can turn that chaos into insights, and they’re willing to pay handsomely for it.

What Does a Data Engineer Actually Do?

Before we jump into the data engineer roadmap, let’s clear up what this job actually involves. I’ve met too many aspiring engineers who think it’s all about writing SQL queries or building dashboards. That’s just scratching the surface.

The Real Day-to-Day

As a data engineer, you’re the architect and plumber of the data world. Your job is to:

- Design and build data pipelines that move data from source systems (APIs, databases, SaaS tools) into data warehouses or lakes

- Transform raw data into clean, structured formats that analysts and data scientists can actually use

- Ensure data quality and reliability—because bad data leads to bad decisions

- Optimize performance and costs—a poorly designed BigQuery table can cost thousands of dollars a month

- Build scalable systems that grow with the business

A Real GCP Example

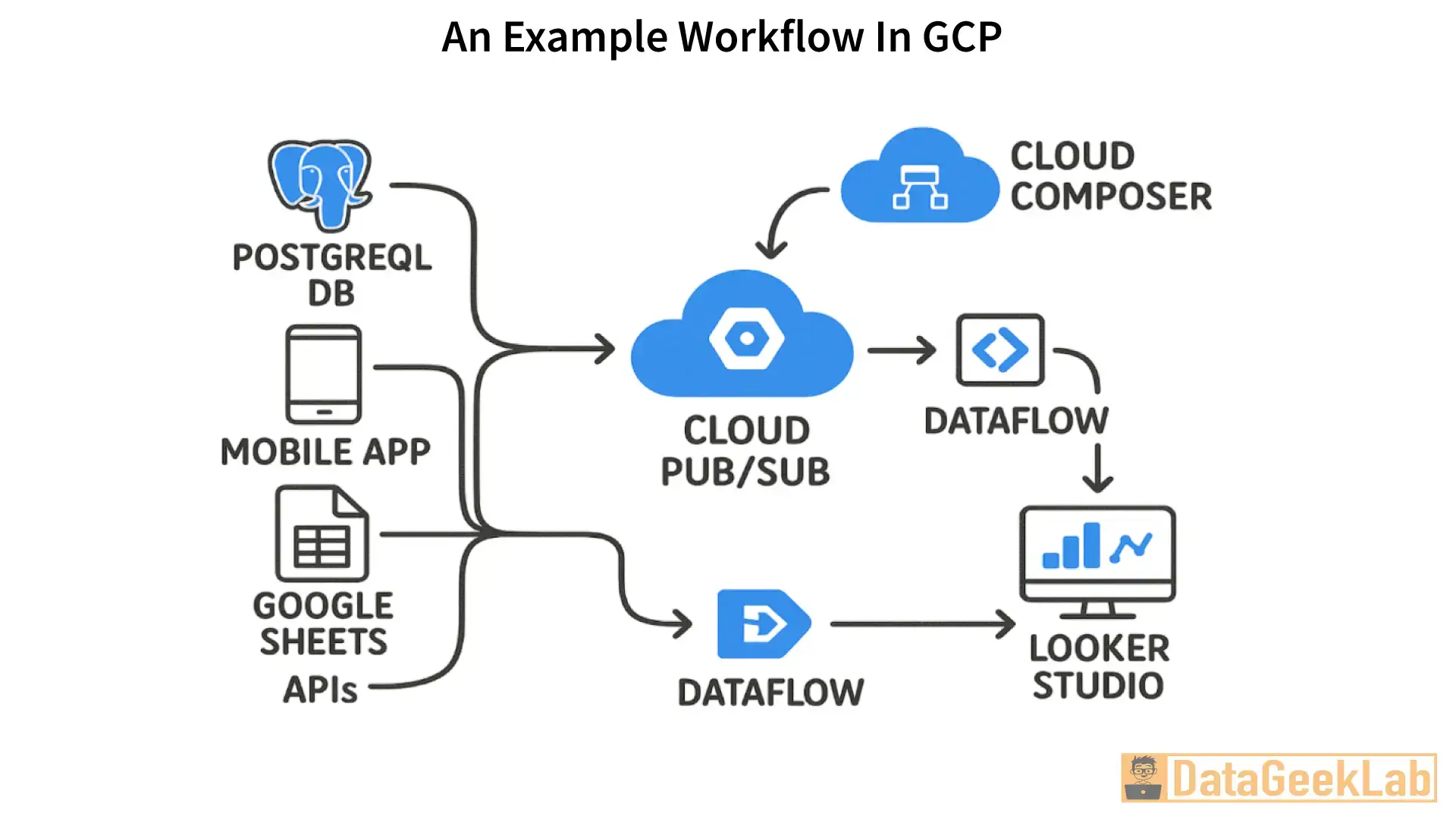

Let me paint you a picture. Last year, I worked on a project for an e-commerce company. They had order data in a PostgreSQL database, user behavior events streaming from their mobile app, and marketing data sitting in Google Sheets and third-party APIs.

My job? Build a unified data platform on GCP where all this information flows together.

The pipeline used Cloud Pub/Sub for real-time event streaming, Dataflow for processing and transformation, BigQuery as the data warehouse, Cloud Composer for orchestration, and Cloud Functions for lightweight data ingestion.

This is what modern data engineering looks like. You’re not just writing scripts—you’re designing entire ecosystems.

Core Technical Skills You Need to Master

Alright, let’s talk about the data engineering skills that actually matter. I’m going to be brutally honest about what’s essential versus what’s nice-to-have.

1. SQL: Your Most Powerful Weapon

I cannot stress this enough: SQL is the foundation of everything. I still use SQL every single day, and I’m a Lead Engineer. You need to master complex joins, window functions, CTEs, performance optimization, and BigQuery-specific features like partitioning, clustering, and array operations.

Pro tip: I've interviewed dozens of candidates, and the difference between junior and senior engineers often shows up in their SQL. Learn to write queries that are not just correct, but performant and maintainable.
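Here is a small sketch of a classic CTE plus window-function pattern: keeping only the latest record per key. It uses Python's built-in sqlite3 module as a local stand-in for BigQuery so it runs anywhere (window functions need SQLite 3.25 or newer), and the order_events table and its columns are hypothetical. In BigQuery you could skip the outer filter and write `QUALIFY ROW_NUMBER() OVER (...) = 1` instead.

```python
import sqlite3

# Hypothetical events table: the same order can arrive more than once (e.g. retries).
ROWS = [
    ("o1", "pending", "2025-01-01 10:00"),
    ("o1", "shipped", "2025-01-02 09:00"),
    ("o2", "pending", "2025-01-01 11:00"),
    ("o2", "pending", "2025-01-01 11:00"),  # exact duplicate
    ("o3", "cancelled", "2025-01-03 08:30"),
]

# The CTE ranks rows within each order_id, newest first; the outer query keeps rank 1.
LATEST_PER_ORDER = """
WITH ranked AS (
    SELECT
        order_id,
        status,
        updated_at,
        ROW_NUMBER() OVER (
            PARTITION BY order_id
            ORDER BY updated_at DESC
        ) AS rn
    FROM order_events
)
SELECT order_id, status, updated_at
FROM ranked
WHERE rn = 1
ORDER BY order_id
"""


def latest_status(rows):
    """Return the most recent row per order_id using a CTE + window function."""
    conn = sqlite3.connect(":memory:")
    try:
        conn.execute(
            "CREATE TABLE order_events (order_id TEXT, status TEXT, updated_at TEXT)"
        )
        conn.executemany("INSERT INTO order_events VALUES (?, ?, ?)", rows)
        return conn.execute(LATEST_PER_ORDER).fetchall()
    finally:
        conn.close()


if __name__ == "__main__":
    for row in latest_status(ROWS):
        print(row)
```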

2. Python: The Swiss Army Knife

Python is the de facto language for data engineering. You’ll use it for writing ETL/ELT scripts, building data pipelines with Apache Airflow, working with APIs, transforming data with pandas, and interacting with GCP services via their client libraries.

3. Data Modeling

Understanding how to structure data is critical. You need to know star schema vs snowflake schema, normalization vs denormalization, Slowly Changing Dimensions, and fact/dimension tables.
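Slowly Changing Dimensions are easiest to grasp by example. The sketch below shows Type 2, which preserves history by closing out the old row and appending a new "current" row whenever a tracked attribute changes. It is plain in-memory Python under simplifying assumptions (one tracked attribute, hypothetical CustomerVersion fields, and customers missing from the source left untouched); in a warehouse you would typically express the same logic as a MERGE, or let dbt snapshots handle it.

```python
from dataclasses import dataclass, replace
from datetime import date
from typing import Dict, List, Optional


@dataclass(frozen=True)
class CustomerVersion:
    customer_id: str
    city: str                 # the single tracked attribute in this sketch
    valid_from: date
    valid_to: Optional[date]  # None means "still the current version"
    is_current: bool


def apply_scd2(dimension: List[CustomerVersion],
               incoming: Dict[str, str],
               as_of: date) -> List[CustomerVersion]:
    """Apply SCD Type 2: expire changed rows and append new current versions."""
    result: List[CustomerVersion] = []
    current: Dict[str, CustomerVersion] = {}
    for row in dimension:
        if row.is_current:
            current[row.customer_id] = row
        else:
            result.append(row)  # historical versions are never modified

    for cid, row in current.items():
        new_city = incoming.get(cid)
        if new_city is not None and new_city != row.city:
            # Attribute changed: close out the old version...
            result.append(replace(row, valid_to=as_of, is_current=False))
            # ...and open a new current version starting today.
            result.append(CustomerVersion(cid, new_city, as_of, None, True))
        else:
            result.append(row)  # unchanged or absent from source: keep as-is

    for cid, city in incoming.items():
        if cid not in current:
            # Brand-new customer: first version.
            result.append(CustomerVersion(cid, city, as_of, None, True))
    return result
```

Running the function again with the same input leaves the table unchanged, which matters once your pipelines start retrying.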

Real example: I once redesigned a BigQuery dataset that was using heavily normalized tables (think 15+ joins for a single report). By denormalizing and using nested/repeated fields, we reduced query costs by 70% and improved performance by 10x.
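To illustrate what denormalizing with nested/repeated fields means for the data itself: instead of a separate line-items table joined at query time, each order row carries its items as a repeated record (an ARRAY of STRUCTs in BigQuery terms). The function and field names here are hypothetical, and in practice you would usually build this shape in SQL with ARRAY_AGG(STRUCT(...)) rather than in Python.

```python
from collections import defaultdict
from typing import Dict, List


def nest_orders(orders: List[dict], line_items: List[dict]) -> List[dict]:
    """Fold a normalized line_items table into each order as a repeated field.

    orders: dicts with keys order_id, customer_id (hypothetical schema)
    line_items: dicts with keys order_id, sku, qty, price
    Returns one dict per order with an 'items' list, the shape a
    REPEATED RECORD column would hold.
    """
    items_by_order: Dict[str, List[dict]] = defaultdict(list)
    for li in line_items:
        items_by_order[li["order_id"]].append(
            {"sku": li["sku"], "qty": li["qty"], "price": li["price"]}
        )

    nested = []
    for order in orders:
        row = dict(order)  # copy so the input rows are not mutated
        row["items"] = items_by_order.get(order["order_id"], [])
        nested.append(row)
    return nested
```

Reading the items back, for example `sum(i["qty"] * i["price"] for i in order["items"])`, needs no join; in BigQuery SQL the equivalent is UNNEST over the items column.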

4. Cloud Platform Knowledge (GCP Focus)

Since you’re targeting a GCP data engineer role, you need deep knowledge of BigQuery, Dataflow, Cloud Composer, Cloud Pub/Sub, Cloud Storage, Cloud Functions/Run, and IAM & Security.

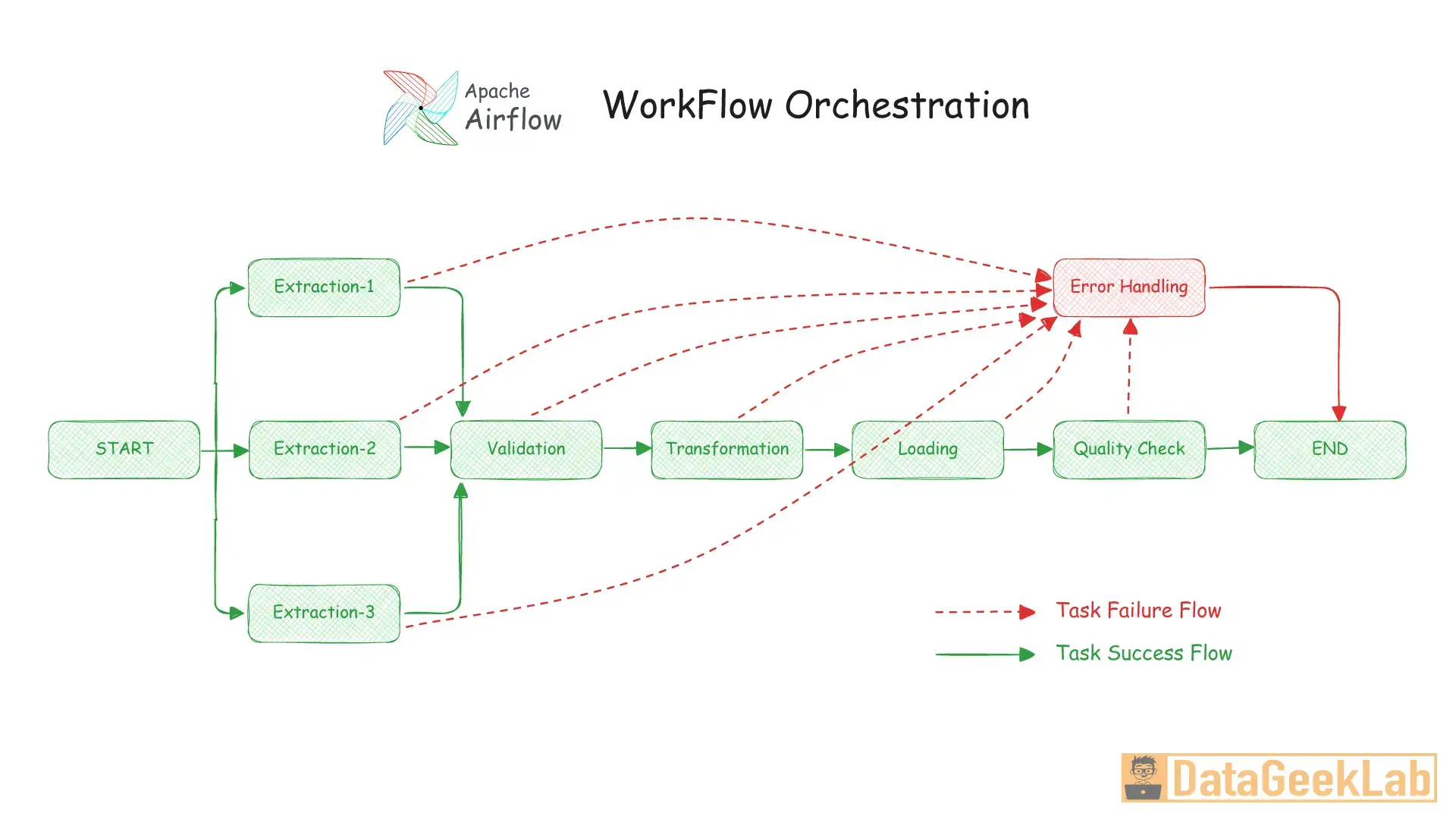

5. Workflow Orchestration

Learning Apache Airflow changed my career. It’s the industry standard for orchestrating data pipelines. You need to understand DAG design, task dependencies, XComs, error handling, and backfilling.
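A DAG is just a dependency graph, and the scheduler's core job is to start each task only after its upstream tasks finish. The sketch below is not Airflow code: it uses Python's standard-library graphlib (3.9+) on a hypothetical pipeline to show how declared dependencies turn into an execution order, and which tasks could run in parallel. In an Airflow DAG file you would declare the same edges with the `>>` operator.

```python
from graphlib import CycleError, TopologicalSorter
from typing import Dict, List, Set

# Hypothetical pipeline: each task maps to the set of tasks it depends on.
PIPELINE: Dict[str, Set[str]] = {
    "extract_orders": set(),
    "extract_events": set(),
    "load_raw": {"extract_orders", "extract_events"},
    "dbt_run": {"load_raw"},
    "dbt_test": {"dbt_run"},
    "refresh_dashboard": {"dbt_test"},
}


def execution_waves(graph: Dict[str, Set[str]]) -> List[List[str]]:
    """Group tasks into waves; tasks in the same wave have no mutual dependencies.

    Raises CycleError if the graph contains a cycle (i.e. it is not a DAG).
    """
    sorter = TopologicalSorter(graph)
    sorter.prepare()
    waves = []
    while sorter.is_active():
        ready = sorted(sorter.get_ready())  # all upstream tasks are done
        waves.append(ready)
        sorter.done(*ready)                 # mark them finished
    return waves


if __name__ == "__main__":
    for i, wave in enumerate(execution_waves(PIPELINE)):
        print(i, wave)
```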

6. Version Control and CI/CD

You need to know Git, pull requests and code reviews, CI/CD pipelines (Cloud Build, GitHub Actions), and Infrastructure as Code using Terraform.

Understanding the Modern Data Stack

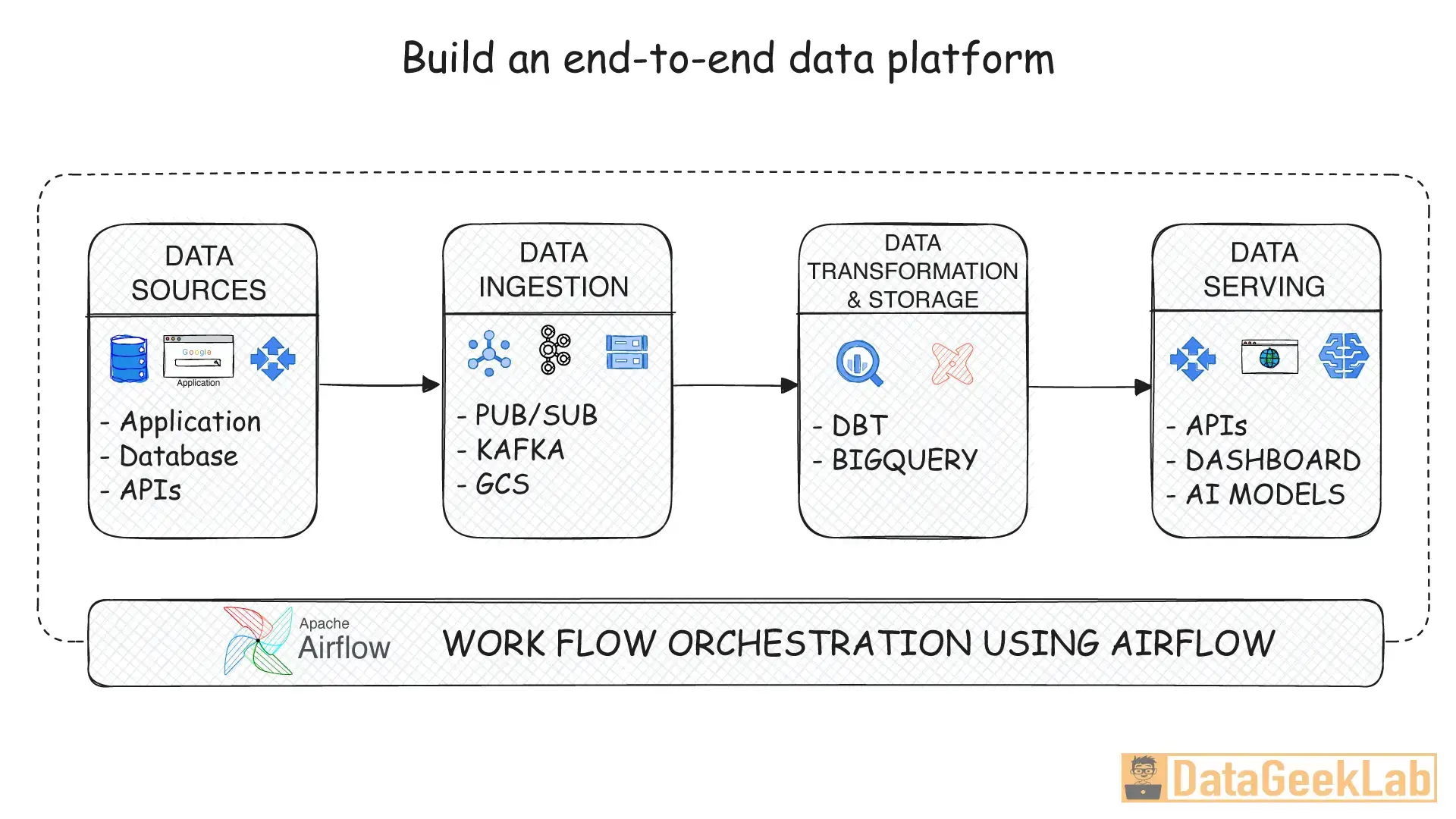

The modern data stack has revolutionized how we build data platforms. Let me break down the key components based on my experience.

The modern data stack consists of six layers:

- Data Sources: databases, SaaS apps, event streams

- Ingestion: Dataflow and Pub/Sub, loading raw data first (ELT rather than ETL)

- Storage: BigQuery + Cloud Storage

- Transformation: dbt, Dataform, Dataflow

- Orchestration: Cloud Composer

- Analytics: Looker, Looker Studio

My workflow: I use dbt for 90% of transformations. It brings software engineering practices like version control, testing, and documentation to your SQL logic.

Essential Tools and Technologies

Let me give you the data engineer tools I actually use, organized by priority:

Must-Learn (Foundation)

- SQL (any dialect, then specialize in BigQuery)

- Python (3.9+)

- Git & GitHub

- Linux/Unix command line

- BigQuery

Should-Learn (Professional Level)

- Apache Airflow / Cloud Composer

- dbt (data build tool)

- Dataflow / Apache Beam

- Docker

- Terraform

- Cloud Pub/Sub

Nice-to-Have (Advanced)

- Spark

- Kafka

- Kubernetes

- Scala/Go

- BigQuery ML

Your path doesn't need to take 8 years. With focused learning, you can compress this into 18-24 months.

Your Learning Roadmap: Beginner to Advanced

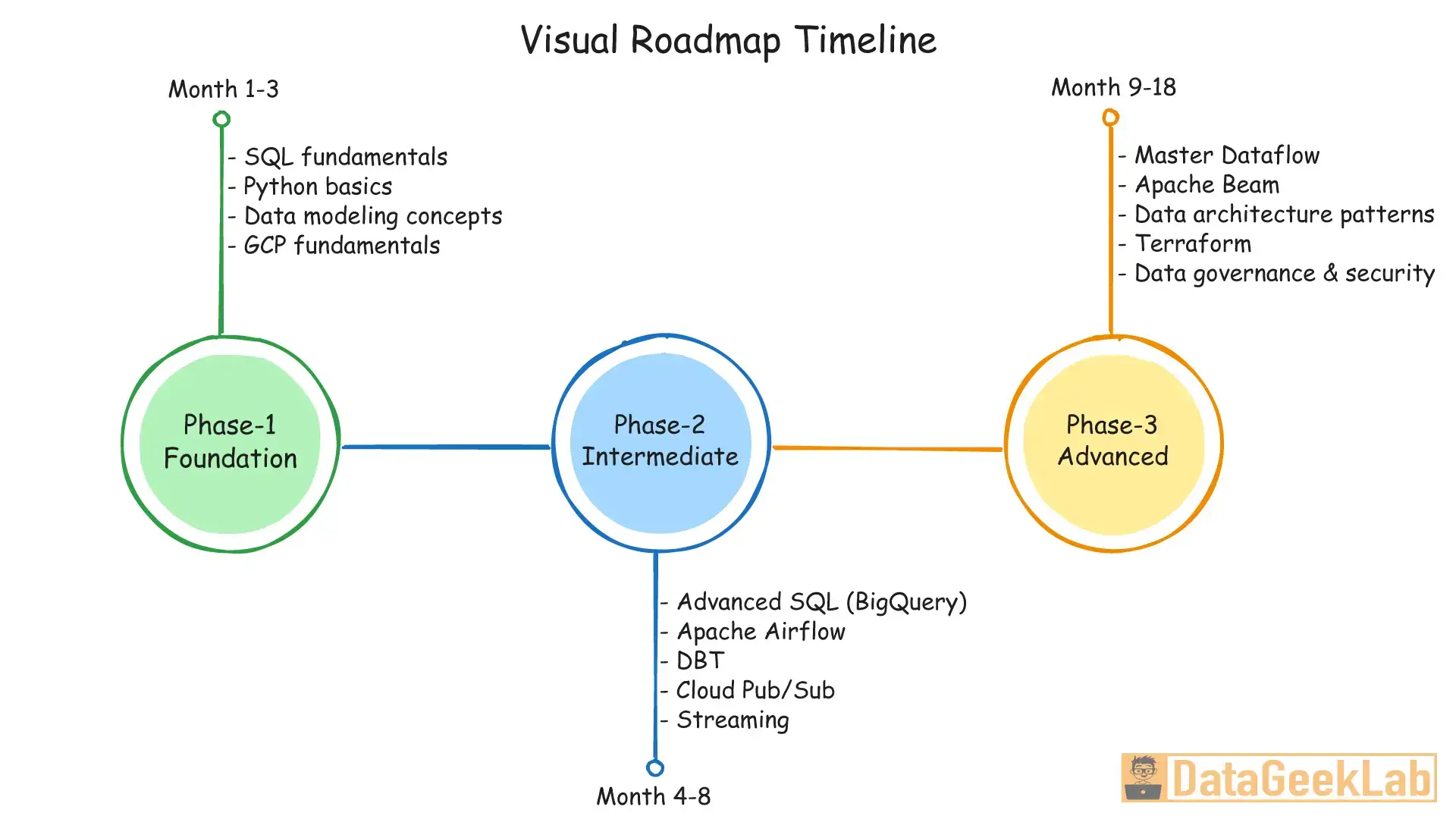

Phase 1: Foundation (Months 1-3)

Goal: Build a solid technical foundation

Focus 40% on SQL fundamentals, 30% on Python basics, 20% on data modeling concepts, and 10% on GCP fundamentals.

Milestone project: Build a simple ETL pipeline that extracts data from a public API, transforms it using Python/pandas, loads it into BigQuery, and creates a dashboard in Looker Studio.
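For this milestone, the extract step is where pagination bugs like the one in Lesson 3 below tend to hide. This sketch assumes a hypothetical API that returns pages shaped like `{"results": [...], "next_cursor": ...}` and takes the page-fetching function as a parameter, so it can be tested without network access; the transform's field names are also made up. It uses plain dicts, though pandas works just as well for the transform step.

```python
from typing import Callable, Iterator, Optional

# Hypothetical page fetcher: takes a cursor (None for the first page) and
# returns {"results": [...], "next_cursor": "<token>" or None}.
FetchPage = Callable[[Optional[str]], dict]


def extract_all(fetch_page: FetchPage) -> Iterator[dict]:
    """Yield every record, following cursors until the API reports no more pages."""
    cursor: Optional[str] = None
    while True:
        page = fetch_page(cursor)
        yield from page["results"]
        cursor = page.get("next_cursor")
        if not cursor:  # stop only when the API says there is nothing left
            break


def transform(record: dict) -> dict:
    """Normalize one raw record into the (hypothetical) warehouse schema."""
    value = record.get("value")
    return {
        "id": str(record["id"]),
        "name": (record.get("name") or "").strip(),
        "value": float(value) if value is not None else None,
    }
```

To load the transformed rows, the google-cloud-bigquery client offers `Client.load_table_from_json(rows, table_id)`, or `load_table_from_dataframe` if you build a pandas DataFrame instead.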

Phase 2: Intermediate (Months 4-8)

Goal: Build real-world data engineering skills

Learn advanced SQL (BigQuery-specific), Apache Airflow, dbt, Cloud Pub/Sub and streaming, and version control workflows.

Milestone project: Build an end-to-end data platform with multiple data sources, Cloud Composer orchestration, dbt transformations, data quality checks, and CI/CD deployment.

Phase 3: Advanced (Months 9-18)

Goal: Become a senior-level engineer

Master Dataflow and Apache Beam, data architecture patterns, Infrastructure as Code with Terraform, performance and cost optimization, and data governance & security.

Real-World Lessons from 8 Years on GCP

Let me share some hard-earned wisdom from real GCP projects I’ve worked on.

Lesson 1: BigQuery Cost Optimization is Critical

The mistake: In my second year, I built a reporting pipeline that ran hourly and scanned 2 TB per run. At the on-demand rate of $5 per TB at the time, that came to 2 TB × $5 × 24 runs/day × 30 days = $7,200/month.

The fix: I partitioned the table by date (cutting each run's scan to ~50 GB), added clustering, and used materialized views. Final cost: $200/month for the same insights.

Takeaway: Partition and cluster your large BigQuery tables. Use the query validator in the BigQuery console (or a dry run, such as `bq query --dry_run`) to check how much data a query will scan before you run it.
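The arithmetic behind this lesson is worth making explicit, because on-demand BigQuery cost scales linearly with both bytes scanned and how often a query runs. This small helper assumes a 30-day month and a flat price per TB. The $5 figure was the on-demand rate at the time; Google now lists on-demand pricing at $6.25 per TiB in the US multi-region, so plug in your current rate.

```python
DAYS_PER_MONTH = 30        # simplifying assumption for a monthly estimate
RUNS_PER_DAY_HOURLY = 24   # the pipeline in this lesson ran every hour


def monthly_scan_cost(tb_per_run: float, usd_per_tb: float,
                      runs_per_day: int = RUNS_PER_DAY_HOURLY,
                      days: int = DAYS_PER_MONTH) -> float:
    """Estimate on-demand query cost: TB scanned x price per TB x number of runs."""
    return tb_per_run * usd_per_tb * runs_per_day * days


if __name__ == "__main__":
    before = monthly_scan_cost(2.0, 5.0)   # full scans: 2 TB per run
    after = monthly_scan_cost(0.05, 5.0)   # ~50 GB per run after partitioning
    print(f"before: ${before:,.0f}/month, after: ${after:,.0f}/month")
```

The scan cost alone after partitioning comes out to about $180/month, close to the ~$200 total above. To get the bytes a real query will scan, a dry run reports the estimate; in the Python client that is `QueryJobConfig(dry_run=True)` and the job's `total_bytes_processed`.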

Lesson 2: Idempotency Saves Your Life

Make all pipelines idempotent—they should produce the same result no matter how many times you run them. Use MERGE statements instead of INSERT, implement deduplication logic, and add unique identifiers to all records.
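The difference between appending and merging is easiest to see by loading the same batch twice. This in-memory sketch stands in for a BigQuery MERGE keyed on a unique ID (event_id is a hypothetical key): matched keys are updated, new keys are inserted, and duplicates within a batch collapse, so a rerun changes nothing. The append-only version shows what plain INSERT semantics do on a retry.

```python
from typing import Dict, Iterable, List


def merge_upsert(target: Dict[str, dict], batch: Iterable[dict],
                 key: str = "event_id") -> Dict[str, dict]:
    """Idempotent load: upsert rows by a unique key instead of appending.

    Mimics MERGE ... WHEN MATCHED THEN UPDATE / WHEN NOT MATCHED THEN INSERT.
    """
    merged = dict(target)        # don't mutate the caller's "table"
    for row in batch:
        merged[row[key]] = row   # later rows with the same key win (dedup)
    return merged


def append_only(target_rows: List[dict], batch: Iterable[dict]) -> List[dict]:
    """Non-idempotent load for comparison: plain INSERT semantics."""
    return target_rows + list(batch)
```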

Lesson 3: Monitor Data Quality, Not Just Pipeline Success

One of my pipelines reported success for weeks while we were loading incomplete data because of an API pagination bug. Implement row counts, null checks, value range validation, schema drift detection, and freshness checks using dbt tests, Great Expectations, and custom Airflow sensors.
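Here is a hand-rolled sketch of those checks, the kind of thing dbt tests or Great Expectations give you declaratively. Everything in it is an assumption for illustration: the column names, the "amount must not be negative" rule, and especially expected_count, which presumes the source API reports a total record count you can compare against.

```python
from datetime import datetime, timedelta, timezone
from typing import List, Optional, Set


def quality_failures(rows: List[dict], expected_count: int,
                     expected_columns: Set[str], max_staleness: timedelta,
                     now: Optional[datetime] = None) -> List[str]:
    """Run basic checks on a loaded batch; an empty list means it passed."""
    now = now or datetime.now(timezone.utc)
    failures = []

    # 1. Row count: catches silent truncation such as a pagination bug.
    if len(rows) != expected_count:
        failures.append(f"row count {len(rows)} != expected {expected_count}")

    for i, row in enumerate(rows):
        # 2. Schema drift: columns added or removed upstream.
        if set(row) != expected_columns:
            failures.append(f"row {i}: columns {sorted(row)} differ from schema")
            continue
        # 3. Null checks on every expected column.
        for col in sorted(expected_columns):
            if row[col] is None:
                failures.append(f"row {i}: {col} is null")
        # 4. Value range (hypothetical rule): amounts should never be negative.
        if row.get("amount") is not None and row["amount"] < 0:
            failures.append(f"row {i}: negative amount {row['amount']}")

    # 5. Freshness: the newest record should be recent (timezone-aware timestamps).
    stamps = [r["updated_at"] for r in rows if r.get("updated_at") is not None]
    if not stamps or now - max(stamps) > max_staleness:
        failures.append("data is stale")
    return failures
```

A non-empty result is what should fail the task or page someone, rather than letting the pipeline report success.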

Lesson 4: Design for Failure

Everything will fail eventually. Implement exponential backoff for API calls, set appropriate timeouts, use Airflow retries, design circuit breakers, and create actionable alerting.
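A minimal retry helper with exponential backoff, assuming transient errors surface as ConnectionError or TimeoutError (adjust retry_on for your client library). It uses "full jitter", a random delay up to an exponentially growing cap, so many workers don't retry in lockstep, and it takes sleep as a parameter so it can be tested without actually waiting.

```python
import random
import time
from typing import Callable, Tuple, Type, TypeVar

T = TypeVar("T")


def retry_with_backoff(func: Callable[[], T], *, max_attempts: int = 5,
                       base_delay: float = 1.0, max_delay: float = 30.0,
                       retry_on: Tuple[Type[BaseException], ...] = (ConnectionError, TimeoutError),
                       sleep: Callable[[float], None] = time.sleep) -> T:
    """Call func(), retrying transient failures with exponential backoff + jitter.

    Before retry n (0-based) it sleeps a random time in
    [0, min(max_delay, base_delay * 2**n)].
    """
    if max_attempts < 1:
        raise ValueError("max_attempts must be at least 1")
    for attempt in range(max_attempts):
        try:
            return func()
        except retry_on:
            if attempt == max_attempts - 1:
                raise  # out of attempts: surface the error so alerting fires
            cap = min(max_delay, base_delay * 2 ** attempt)
            sleep(random.uniform(0, cap))
    raise AssertionError("unreachable")
```

At the task level, Airflow offers similar behavior through operator arguments such as retries, retry_delay, and retry_exponential_backoff, so a helper like this is mainly useful for individual API calls inside a task. Errors outside retry_on (a ValueError from bad input, say) are raised immediately, since retrying them would not help.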

Lesson 5: ELT > ETL in the Cloud

Load raw data into BigQuery quickly, then transform it with SQL in dbt. On my projects, this increased development speed 3x and made data analysts self-sufficient.

Lesson 6: Documentation is a Force Multiplier

Document architecture diagrams, data dictionaries, runbooks, and decision logs using dbt (auto-generated docs), Confluence/Notion, and diagrams.net.

Common Mistakes to Avoid

- ❌ Over-Engineering Early: Start simple. Add complexity only when you need it.

- ❌ Ignoring Data Lineage: Track where data comes from and where it goes from day one.

- ❌ Not Testing Before Production: Write unit tests, test with sample data, use dev/staging/prod environments.

- ❌ Hard-Coding Everything: Use Secret Manager for credentials, store config in Airflow Variables or Cloud Storage.

- ❌ Neglecting Monitoring: Implement monitoring at every layer: pipeline execution, data quality, cost, and performance.

- ❌ Not Learning the Fundamentals: Master SQL first. Everything else builds on this foundation.

- ❌ Copying Production Data to Dev: Anonymize/mask sensitive data, create synthetic test datasets, and comply with GDPR/CCPA.
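One way to make production-like data safer for dev is keyed hashing of identifiers plus masking of direct PII. This sketch assumes hypothetical customer_id, email, and country fields. Because HMAC tokens are deterministic for a given key, joins between masked tables still line up. Note that this is pseudonymization rather than full anonymization, so check it against your GDPR/CCPA obligations, and keep the key in Secret Manager rather than in code.

```python
import hashlib
import hmac


def pseudonymize(value: str, secret_key: bytes) -> str:
    """Replace an identifier with a keyed hash (HMAC-SHA256, hex-encoded).

    Same input + same key -> same token, so joins still work, but the
    original value can't be recovered from the token without the key.
    """
    return hmac.new(secret_key, value.encode("utf-8"), hashlib.sha256).hexdigest()


def mask_email(email: str) -> str:
    """Keep only the domain, e.g. 'jane@example.com' -> '***@example.com'."""
    _, _, domain = email.partition("@")
    return f"***@{domain}"


def mask_row(row: dict, secret_key: bytes) -> dict:
    """Produce a dev-safe copy of a customer row (field names are hypothetical)."""
    return {
        "customer_id": pseudonymize(row["customer_id"], secret_key),
        "email": mask_email(row["email"]),
        "country": row["country"],  # coarse, non-identifying attribute kept as-is
    }
```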

Your Actionable Data Engineer Roadmap Checklist

Foundation Skills

- Complete a comprehensive SQL course (4-6 weeks)

- Learn Python basics and pandas (4 weeks)

- Understand data modeling (star schema, normalization)

- Create a GCP account and complete BigQuery tutorials

- Build your first ETL pipeline (API → Python → BigQuery)

Core Data Engineering

- Master BigQuery (partitioning, clustering, optimization)

- Learn Apache Airflow (DAGs, operators, scheduling)

- Complete dbt tutorial and build transformation models

- Implement version control with Git

- Build an end-to-end pipeline with orchestration

Cloud and Streaming

- Learn Cloud Pub/Sub for event streaming

- Build your first Dataflow pipeline

- Understand streaming vs batch processing

- Implement real-time data ingestion

Production-Ready Skills

- Learn Infrastructure as Code (Terraform)

- Implement CI/CD for data pipelines

- Add data quality testing

- Set up monitoring and alerting

- Optimize for cost and performance

Professional Development

- Build 3-5 portfolio projects on GitHub

- Get GCP Professional Data Engineer certification

- Contribute to open-source projects (Airflow, dbt, Beam)

- Write blogs or give talks about your learnings

- Network with other data engineers

FAQs

1. Is data engineering a good career in 2025?

Absolutely. The demand for data engineers continues to outpace supply. Data engineer roles have grown 35% year-over-year, with median salaries ranging from $120K-$180K for mid-level positions and $180K-$250K+ for senior roles.

2. How long does it take to become a data engineer?

From my experience mentoring engineers:

- Complete beginner to entry-level: 6-12 months of focused learning

- Entry-level to mid-level: 2-3 years of hands-on experience

- Mid-level to senior: 3-5 additional years

If you’re coming from software engineering or data analytics, you can compress this timeline significantly.

3. Do I need a computer science degree to become a data engineer?

No. While a CS degree helps, it’s not mandatory. What matters more: demonstrable skills, portfolio projects, and problem-solving ability. I’ve worked with excellent data engineers from mathematics, business analytics, self-taught, and bootcamp backgrounds.

4. Should I learn AWS or GCP for data engineering?

Both are valuable, but specialize in one first. I recommend GCP for data engineering because BigQuery is arguably the best cloud data warehouse, GCP’s data tools are more integrated and user-friendly, and it has a great community and documentation.

5. Is Airflow still relevant, or should I learn newer orchestration tools?

Airflow is still the industry standard and isn’t going anywhere. It has the largest community, most extensive integrations, enterprise adoption (Cloud Composer is managed Airflow), and is proven at massive scale. Learn Airflow first.

6. What’s the difference between a data engineer and a data analyst?

Data engineers build the infrastructure; data analysts use it. Data engineers build pipelines, design databases, ensure data quality, and optimize performance. Data analysts query data, create reports, generate insights, and communicate findings.

7. How important is real-time streaming vs batch processing?

It depends on your use case.

- Batch processing (90% of use cases): daily reports, analytics, machine learning training

- Streaming (10% of use cases): real-time dashboards, fraud detection, live recommendations

Start with batch processing, and add streaming only when business requirements demand it.
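Much of the difference between batch and streaming shows up in windowing. This sketch counts events per fixed (tumbling) one-minute window in plain Python, roughly what a Beam/Dataflow pipeline using FixedWindows computes; a batch job over a full day of data would produce the same counts, just all at once. It assumes timezone-aware timestamps and deliberately ignores late-arriving data, watermarks, and triggers, which are exactly the parts that make streaming harder.

```python
from collections import Counter
from datetime import datetime, timezone
from typing import Dict, Iterable, Tuple


def tumbling_window_counts(events: Iterable[Tuple[datetime, str]],
                           window_seconds: int = 60) -> Dict[Tuple[int, str], int]:
    """Count events per (window_start_epoch_seconds, key) for fixed windows.

    Timestamps should be timezone-aware; naive datetimes would be
    interpreted in local time by .timestamp().
    """
    counts: Counter = Counter()
    for event_time, key in events:
        ts = int(event_time.timestamp())
        window_start = ts - ts % window_seconds  # align to the window boundary
        counts[(window_start, key)] += 1
    return dict(counts)


if __name__ == "__main__":
    sample = [
        (datetime(2025, 1, 1, 10, 0, 5, tzinfo=timezone.utc), "click"),
        (datetime(2025, 1, 1, 10, 0, 59, tzinfo=timezone.utc), "click"),
        (datetime(2025, 1, 1, 10, 1, 0, tzinfo=timezone.utc), "click"),
    ]
    print(tumbling_window_counts(sample))
```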

Conclusion: Start Your Data Engineering Journey Today

Eight years ago, I took a leap into data engineering without really knowing what I was getting into. If I could go back and give myself advice, it would be this:

Start now. Build things. Break things. Learn from failures.

The data engineer roadmap isn’t about perfection—it’s about consistent progress. You don’t need to know everything before you start. You just need to know enough to build your first pipeline, then your second, then your tenth.

The field of data engineering is evolving rapidly, and that’s exciting. BigQuery, Dataflow, Cloud Composer—these tools are getting better every year, making it easier to build powerful data platforms.

Build your first data pipeline this week. It doesn’t have to be complex. Extract data from a public API, load it into BigQuery, and create a simple visualization. That single project will teach you more than weeks of tutorials.

Then build another one. And another one. Document them on GitHub. Write about what you learned. Share your struggles and successes.

Before you know it, you’ll look back and realize you’ve become a data engineer.

The data engineer learning path is challenging, but it’s also incredibly rewarding. You’ll build systems that power business decisions, enable machine learning, and create value at scale.

Start your data engineering journey today. Follow this roadmap, stay curious, and remember: every expert was once a beginner who didn’t give up.

See you in the data pipelines. 🚀

Want to dive deeper into specific topics? Check out these related posts: BigQuery Performance Optimization, Building Production-Ready Airflow DAGs, What Data Engineers Actually Do, and Cost Optimization Strategies for GCP Data Platforms.

Found this guide helpful? Share it with someone starting their data engineering journey. Let's build a stronger data engineering community together.

Written by a Lead Data Engineer with 8 years of hands-on experience building scalable data platforms on Google Cloud Platform. All examples and lessons are from real-world projects.